Entropy: Basics and Markov Sources

This page describes the basics of entropy and presents the entropy equation for Markov sources. It also clarifies the differences between bits, decits, and nats.

- The information content in a signal is high if its probability of occurrence is low.

- If the probability of occurrence of a message is p, then its information content I is defined as follows.

Difference between Bits, Decits, and Nats

The three units differ only in the base of the logarithm used:

I = log2(1/p) … bits

I = log10(1/p) … decits (hartleys)

I = ln(1/p) … nats
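As a rough illustration of the three units, the sketch below computes the information content of a single message in each of them. The function name `information_content` and its `unit` parameter are made up for this example and do not come from any library.

```python
import math

def information_content(p, unit="bits"):
    """Information content I of a message with probability p (0 < p <= 1).

    Hypothetical helper: 'unit' selects the logarithm base.
    """
    if not 0 < p <= 1:
        raise ValueError("p must be in the interval (0, 1]")
    if unit == "bits":      # base-2 logarithm
        return math.log2(1 / p)
    if unit == "decits":    # base-10 logarithm (also called hartleys)
        return math.log10(1 / p)
    if unit == "nats":      # natural logarithm
        return math.log(1 / p)
    raise ValueError("unit must be 'bits', 'decits', or 'nats'")

# A rarer message carries more information than a common one.
rare = information_content(0.125)    # 3 bits
common = information_content(0.5)    # 1 bit
```

Because the units differ only by a constant factor, a value in nats can be converted to bits by dividing by ln(2).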

Entropy Basics

- The average information content per symbol in a group of symbols is known as entropy and is represented by H.

- If there are M symbols, and their probabilities of occurrence are p1, p2, …, pi, …, pM, then entropy H is expressed as follows:

H = SUM(from i=1 to i=M) pi*log2(1/pi) bits/symbol

Entropy is maximum when all M symbols are equally likely, that is, when pi = 1/M for every symbol. In this case, Hmax = log2(M) bits/symbol.

If a data source is emitting symbols at a rate rs (symbols/sec), then the source information rate R is given by:

R = rs * H (bits/sec)
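The entropy and information-rate formulas above can be sketched in a few lines of Python. The function names `entropy` and `information_rate` are illustrative, and the probabilities and symbol rate in the example are invented values, not data from a real source.

```python
import math

def entropy(probs):
    """Entropy H in bits/symbol for a list of symbol probabilities."""
    if abs(sum(probs) - 1.0) > 1e-9:
        raise ValueError("probabilities must sum to 1")
    # Symbols with zero probability contribute nothing to the sum.
    return sum(p * math.log2(1 / p) for p in probs if p > 0)

def information_rate(symbol_rate, probs):
    """Information rate R = rs * H in bits/sec."""
    return symbol_rate * entropy(probs)

# Example with four symbols of unequal probability (assumed values).
probs = [0.5, 0.25, 0.125, 0.125]
h = entropy(probs)                      # 1.75 bits/symbol
h_max = entropy([0.25] * 4)             # log2(4) = 2 bits/symbol
rate = information_rate(1000, probs)    # 1750 bits/sec at 1000 symbols/sec
```

The unequal distribution gives 1.75 bits/symbol, below the 2 bits/symbol reached when the four symbols are equally likely.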

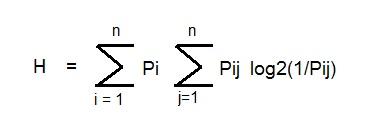

Entropy of Markov Sources

The entropy of a Markov source is expressed as follows:

H = SUM(over all states i) pi * SUM(over all states j) Pij*log2(1/Pij) bits/symbol

Where:

- pi is the probability that the source is in state i.

- Pij is the probability of a transition from state i to state j.

A Markov source is a source whose symbols are not emitted independently: the probability of the next symbol depends on the current state of the source.
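A minimal sketch of the calculation, assuming the standard form H = SUM over i of pi * SUM over j of Pij*log2(1/Pij), with the state probabilities pi supplied by the caller rather than derived from the transition matrix. The function name `markov_entropy` and the two-state example are hypothetical.

```python
import math

def markov_entropy(state_probs, transition):
    """Entropy in bits/symbol of a Markov source.

    state_probs[i]   : probability pi that the source is in state i
    transition[i][j] : probability Pij of going from state i to state j
    """
    h = 0.0
    for p_i, row in zip(state_probs, transition):
        if abs(sum(row) - 1.0) > 1e-9:
            raise ValueError("each row of the transition matrix must sum to 1")
        for p_ij in row:
            if p_ij > 0:  # zero-probability transitions contribute nothing
                h += p_i * p_ij * math.log2(1 / p_ij)
    return h

# Two-state source that tends to stay in its current state (assumed values).
state_probs = [0.5, 0.5]
sticky = [[0.9, 0.1],
          [0.1, 0.9]]
h_sticky = markov_entropy(state_probs, sticky)

# With equal transition probabilities the state carries no memory.
memoryless = [[0.5, 0.5],
              [0.5, 0.5]]
h_memoryless = markov_entropy(state_probs, memoryless)  # 1 bit/symbol
```

The dependence between successive symbols lowers the entropy: the sticky source comes out well below the 1 bit/symbol of the memoryless case.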
