What is Edge AI and How Does It Work?


Introduction: In a cloud-only setup, every inference requires sending data to centralized servers, which causes high latency, heavy bandwidth usage, and increased leasing and operating costs. This reliance also raises privacy and security concerns, because sensitive data is vulnerable in transit and while stored on third-party infrastructure. Moreover, connectivity cannot be guaranteed in remote or mobile environments, which makes cloud AI brittle when networks fail. These limitations highlighted the need to move intelligence closer to the data source, which led to the development of Edge AI devices.

What is Edge AI?

As mentioned above, Edge AI emerged because cloud-based AI could not reliably meet demands for speed, privacy, cost efficiency, offline continuity and system resilience. It shifts AI from remote, centralized infrastructure to intelligent, autonomous devices, enabling real-time, secure and scalable applications across industries.

Edge AI stands for Edge Artificial Intelligence. It refers to the deployment of AI algorithms and models directly on edge devices such as smartphones, IoT devices, sensors, cameras, drones and embedded systems. Instead of relying on centralized cloud servers, these devices process data locally, at or near the source where it is generated (the “edge” of the network). This enables faster decision-making and reduced latency.
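To make the latency argument concrete, the short Python sketch below compares a cloud-only path (upload, network round trip, server inference) with an on-device path (local inference only). It is a simplified model that ignores queuing and the time to return results, and every number in it, as well as the `LatencyBudget` class itself, is a hypothetical illustration rather than a measurement.

```python
from dataclasses import dataclass


@dataclass
class LatencyBudget:
    """Hypothetical inputs for a simple latency comparison (not measured values)."""
    payload_mbit: float     # size of the data to analyse, in megabits
    uplink_mbit_s: float    # available uplink bandwidth, in megabits per second
    network_rtt_ms: float   # round-trip time between device and cloud server
    cloud_infer_ms: float   # inference time on a cloud server
    edge_infer_ms: float    # inference time on the device's own AI processor

    def cloud_latency_ms(self) -> float:
        # Cloud-only path: upload the raw data, wait for the round trip, run inference
        upload_ms = self.payload_mbit / self.uplink_mbit_s * 1000.0
        return upload_ms + self.network_rtt_ms + self.cloud_infer_ms

    def edge_latency_ms(self) -> float:
        # Edge path: the data never leaves the device, so only local inference counts
        return self.edge_infer_ms


if __name__ == "__main__":
    # Assumed example: one 2-megabit camera frame over a 10 Mbit/s uplink
    budget = LatencyBudget(payload_mbit=2.0, uplink_mbit_s=10.0,
                           network_rtt_ms=60.0, cloud_infer_ms=10.0,
                           edge_infer_ms=40.0)
    print(f"cloud-only: {budget.cloud_latency_ms():.0f} ms")  # cloud-only: 270 ms
    print(f"edge:       {budget.edge_latency_ms():.0f} ms")   # edge:       40 ms
```

With these assumed values the cloud server runs inference faster (10 ms versus 40 ms), yet the end-to-end cloud path is still slower because upload and round-trip time dominate.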

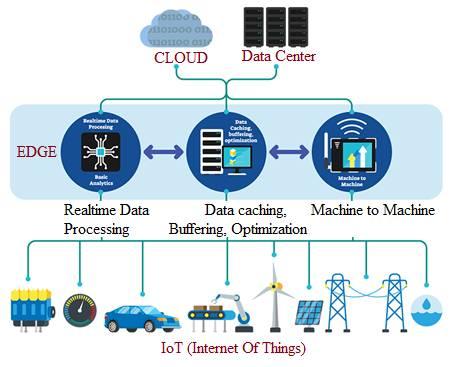

The figure depicts the layered edge computing architecture that Edge AI devices typically follow.

How does Edge AI work?

Edge AI involves the following key components and workflow, described step by step below.

- Step-1 : Data Collection : Sensors or embedded systems on edge devices capture raw data such as video, sound and temperature.

- Step-2 : Local AI Processing : Pre-trained AI models, for example for object detection or speech recognition, are stored on the device. These models are optimized for low-power, real-time inference using lightweight frameworks such as TensorFlow Lite, OpenVINO and ONNX Runtime. The device processes data locally using its onboard AI processor.

- Step-3 : Real-Time Inference and Decision : Based on the AI model’s inference, the device can make immediate decisions, such as detecting an object, raising an alert or controlling a machine. This reduces dependence on cloud or internet connectivity.

- Step-4 : Optional Cloud Communication : Only selected data or summaries are sent to the cloud for long-term storage, analysis or model updates. This conserves bandwidth and improves privacy, since most raw data never leaves the device.
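The four steps above can be sketched as a small Python loop. This is a minimal sketch, assuming a single temperature sensor: `anomaly_model` is a hypothetical stand-in for a real pre-trained network (which in practice would be run through a framework such as TensorFlow Lite), the `uploads` list stands in for the cloud link, and the threshold and batch size are illustrative values.

```python
import statistics
from dataclasses import dataclass, field
from typing import Callable, List


def anomaly_model(temp_c: float) -> float:
    # Hypothetical "model": maps a temperature reading to an anomaly score in [0, 1]
    return min(1.0, max(0.0, (temp_c - 20.0) / 20.0))


@dataclass
class EdgeNode:
    model: Callable[[float], float]   # on-device inference function (assumed)
    threshold: float = 0.8            # score at which the device acts locally
    batch_size: int = 5               # readings summarised per cloud upload
    alerts: List[int] = field(default_factory=list)     # time steps that raised alerts
    uploads: List[dict] = field(default_factory=list)   # stand-in for the cloud link
    _buffer: List[float] = field(default_factory=list)
    _batch_alerts: int = 0

    def step(self, t: int, reading: float) -> None:
        score = self.model(reading)               # Step 2: local inference on-device
        if score >= self.threshold:               # Step 3: immediate local decision
            self.alerts.append(t)
            self._batch_alerts += 1
        self._buffer.append(reading)
        if len(self._buffer) == self.batch_size:  # Step 4: upload only a summary
            self.uploads.append({
                "count": len(self._buffer),
                "mean": statistics.fmean(self._buffer),
                "max": max(self._buffer),
                "alerts": self._batch_alerts,
            })
            self._buffer.clear()
            self._batch_alerts = 0


if __name__ == "__main__":
    node = EdgeNode(model=anomaly_model)
    # Step 1: simulated sensor readings in degrees Celsius
    readings = [21.0, 22.0, 38.0, 23.0, 24.0, 22.0, 21.0, 20.0, 21.0, 22.0]
    for t, r in enumerate(readings):
        node.step(t, r)
    print(node.alerts)    # [2]
    print(len(node.uploads), "summaries sent instead of", len(readings), "raw readings")
```

The alert is raised on the device as soon as the reading arrives, while the cloud only receives one compact summary per batch; a real deployment would replace `anomaly_model` with an optimized model and `uploads` with an actual network client.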

Example Use Case (smart surveillance camera)

In such a camera, Edge AI offers the following features:

- Real time face or motion detection directly on the camera

- Instant alerts to security personnel

- No need to upload entire video streams to the cloud
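The camera behaviour described above can be illustrated with a deliberately simple stand-in. The Python sketch below uses frame differencing (counting pixels whose brightness changes between consecutive frames) instead of a neural face or motion detector, and sends only small event records rather than the frames themselves. The helper names, synthetic frames and thresholds are hypothetical choices for illustration.

```python
import json
from typing import List, Optional, Tuple

Frame = List[List[int]]  # grayscale pixel values, 0-255


def make_frame(width: int, height: int,
               box: Optional[Tuple[int, int, int]] = None) -> Frame:
    """Synthetic dark frame; box = (top, left, size) draws a bright square object."""
    frame = [[10] * width for _ in range(height)]
    if box:
        top, left, size = box
        for r in range(top, top + size):
            for c in range(left, left + size):
                frame[r][c] = 200
    return frame


def motion_fraction(prev: Frame, curr: Frame, pixel_delta: int = 25) -> float:
    """Fraction of pixels whose brightness changed by more than pixel_delta."""
    changed = total = 0
    for row_prev, row_curr in zip(prev, curr):
        for a, b in zip(row_prev, row_curr):
            total += 1
            if abs(a - b) > pixel_delta:
                changed += 1
    return changed / total if total else 0.0


def detect_events(frames: List[Frame], min_area: float = 0.05) -> List[dict]:
    """Return a small event record for every frame with enough changed pixels."""
    events = []
    for i in range(1, len(frames)):
        frac = motion_fraction(frames[i - 1], frames[i])
        if frac >= min_area:
            events.append({"frame": i, "changed": round(frac, 3)})
    return events


if __name__ == "__main__":
    background = make_frame(64, 48)
    with_object = make_frame(64, 48, box=(10, 20, 16))   # an object enters the scene
    frames = [background, background, background, with_object, background]
    events = detect_events(frames)
    raw_bytes = sum(len(row) for f in frames for row in f)  # 1 byte per pixel
    event_bytes = len(json.dumps(events).encode("utf-8"))
    print(events)  # [{'frame': 3, 'changed': 0.083}, {'frame': 4, 'changed': 0.083}]
    print(f"raw video: {raw_bytes} bytes, events sent: {event_bytes} bytes")
```

Motion is flagged when the object appears (frame 3) and when it leaves (frame 4); only the two event records would be forwarded to security personnel or the cloud, while the raw frames stay on the camera.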

Ericsson solutions

Ericsson plays a central role in enabling Edge AI by combining its robust 5G/SD‑WAN infrastructure with AI‑ready compute platforms and tools. The integrated solutions from Ericsson empower enterprises to deploy intelligent, low latency and highly secure AI applications right at the network edge.

Enterprise Wireless Solutions from Ericsson include 5G routers such as the R980 and S400. These routers integrate with Ericsson's NetCloud platform to deliver secure and scalable connectivity for Edge AI scenarios. Ericsson also works with various research institutions to develop AI-based solutions and products, and these collaborations help lay the groundwork for future wireless networks such as 6G.

Conclusion:

By shifting AI to the device level, Edge AI enables real-time inference, enhanced privacy, and lower latency and bandwidth use, transforming how intelligent systems interact with the world.
